Contact centers generate massive amounts of customer data every day—but knowing how well you truly perform requires more than internal reports. Leaders need objective, industry-wide insight to understand where they stand, what to improve, and how to stay competitive.

That’s exactly what the Contact Center Auto QA Benchmark Snapshot from SQM Group is designed to deliver. By blending automated quality assurance, customer satisfaction measurement, and industry benchmarking, this snapshot provides a clear, data-backed view of real performance—compared directly to hundreds of peer organizations.

In this blog, we’ll explore what the Auto QA Benchmark measures, why its accuracy matters, how benchmarking changes performance management, and how contact centers use these insights to drive measurable improvements in CSAT, QA, and First Call Resolution (FCR).

1. Is Traditional QA Broken—and Does It Really Improve CSAT?

For years, traditional call monitoring has been the foundation of contact center quality programs. Supervisors score a small sample of calls and use that feedback to coach agents. While this approach helped establish basic quality standards, today’s contact centers operate at a scale and complexity that traditional QA was never designed to handle.

When only 1–2% of calls are evaluated, leaders are left making decisions based on a very limited view of performance. As customer expectations rise and interaction volumes grow, many organizations are questioning whether traditional QA alone can still reflect what customers truly experience, or reliably drive CSAT improvement.

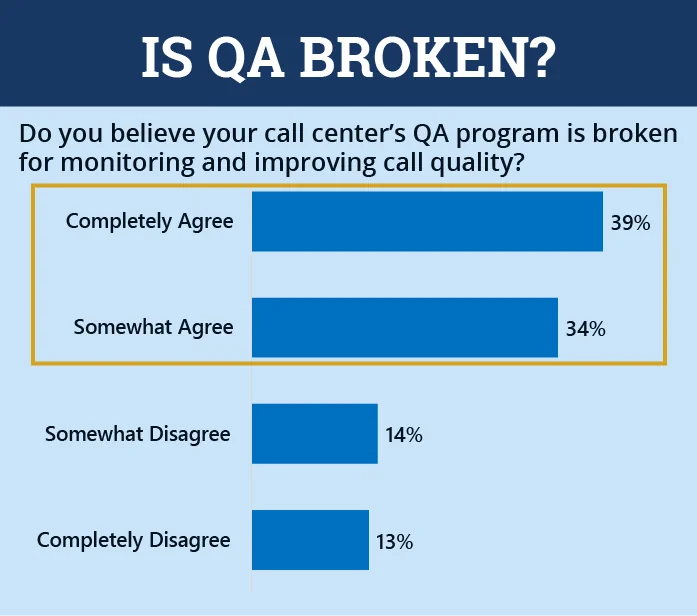

How Leaders Currently View Traditional QA

Many contact center leaders recognize the limitations of manual QA but still rely on it as their primary quality tool. Small call samples, delayed feedback, and subjective scoring often lead to blind spots in performance visibility. Leaders often sense that something isn’t working—but they lack the data to pinpoint what or why with confidence.

This is where doubt begins to grow around whether traditional QA alone can truly support modern CX expectations. SQM’s research shows that 73% of contact center professionals agree that QA is broken.

Does Traditional QA Actually Drive CSAT Improvement?

Even when agents score well on traditional QA checklists, customer outcomes don’t always match. A call may meet internal standards yet still leave the customer confused or dissatisfied. Without full interaction coverage and direct connection to customer perception, it becomes difficult to clearly link QA performance to CSAT improvement.

Interestingly, SQM’s research shows that only 51% of contact center professionals are satisfied that their QA program helps them improve their customer satisfaction.

This growing gap has accelerated the shift toward auto QA, but not all automated QA is created equal. SQM’s Auto QA provides both benchmarking and predictive customer satisfaction measurement, better than anything else in the market. Our benchmark snapshot not only lets you understand and benchmark your current call center performance but also lays out the path for transforming your entire QA program into a powerful auto QA solution.

2. What Is the Auto QA Benchmark Snapshot—and Why Is It So Valuable?

The Auto QA Benchmark Snapshot is a structured, research-backed evaluation designed to show how a contact center’s service quality and customer satisfaction truly compare to industry standards. It does not rely on guesswork; instead, it uses statistically valid data to paint a complete and accurate picture.

Using Generative AI (GenAI), SQM evaluates 400 recorded calls and 400 corresponding post-call surveys to examine both what took place during the interaction and how the customer felt afterward. This balanced approach ensures that performance is measured from both the operational and customer perspectives.

In addition, mySQM leverages GenAI to analyze customer conversations, delivering accurate, benchmarkable QA scores and predictive CSAT results with up to 95% accuracy, matching post-call survey results.
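To give a rough sense of what a 400-call sample can support, here is a minimal sketch of the textbook margin-of-error calculation for a proportion such as CSAT. It assumes simple random sampling and a normal approximation. The function name and defaults are illustrative, and this is not a description of SQM's actual methodology.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    proportion=0.5 is the most conservative (widest) case.
    z=1.96 corresponds to a 95% confidence level.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1.0 - proportion) / sample_size)


# Assumption: 400 randomly selected calls or surveys, as in the snapshot.
moe = margin_of_error(400)
print(f"n=400: +/- {moe * 100:.1f} percentage points")
```

Under these assumptions, a sample of 400 gives a worst-case margin of roughly five percentage points at 95% confidence, which is a common threshold for treating a survey result as statistically valid.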

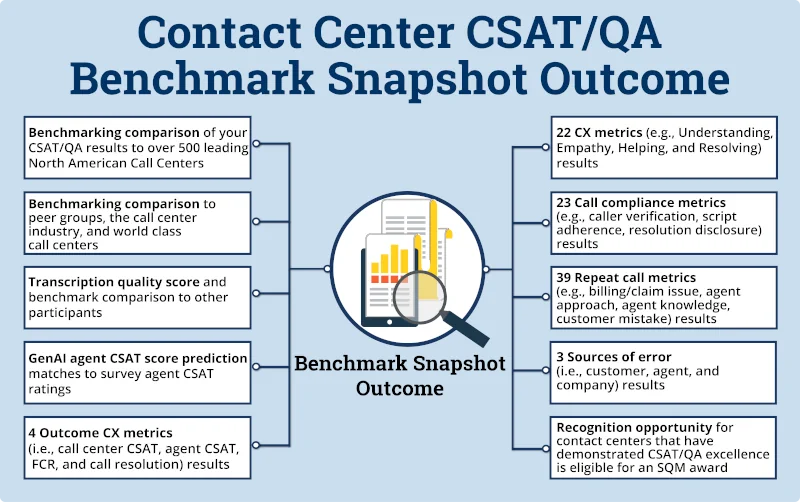

3. What Can You Expect from SQM’s Auto QA Benchmark Snapshot?

The CSAT/QA Benchmark Snapshot outcome provides a comprehensive benchmark comparison against over 500 North American call centers—covering CX, compliance, repeat call metrics, error sources, transcription quality, GenAI CSAT predictions, and recognition through SQM’s Excellence Awards.

- Benchmark comparison of your CSAT/QA results to over 500 leading North American call centers

- Benchmarking comparison to peer groups, the call center industry, and world-class call centers

- Transcription quality score and benchmark comparison to other participants

- GenAI agent CSAT score predictions matched against survey agent CSAT ratings

- 4 Outcome CX metrics (i.e., call center CSAT, agent CSAT, FCR, and call resolution) results

- 22 CX metrics (e.g., Understanding, Empathy, Helping, and Resolving) results

- 23 Call compliance metrics (e.g., caller verification, script adherence, resolution disclosure) results

- 39 Repeat call metrics (e.g., billing/claim issue, agent approach, agent knowledge, customer mistake) results

- 3 Sources of error (i.e., customer, agent, and company) results

- Recognition opportunity: contact centers that have demonstrated CSAT/QA excellence are eligible for an SQM award

4. How Accurate Is the Auto QA Benchmark—and Why Does It Matter?

Benchmarking is only valuable if leaders can trust the results. Since 1996, SQM’s CSAT & QA Benchmark Snapshot has delivered CSAT prediction accuracy of up to 95%, giving organizations confidence that the data reflects what customers actually experience—not just what internal scorecards show. SQM is considered the gold standard for measuring and benchmarking customer satisfaction.

This level of accuracy matters because contact center leaders make high-impact decisions based on these insights—staffing, coaching, investments, and performance goals. When the data is reliable, those decisions become clearer and more defensible.

Accurate benchmarking also builds confidence across the organization. Supervisors trust the coaching, agents trust the feedback, and leadership trusts that improvement efforts are grounded in reality—not guesswork.

The GenAI CSAT score may be higher than the survey CSAT score—and that difference is meaningful. GenAI does not penalize agents for things outside their control, such as system outages, policy limits, or long wait times. Instead, it measures what the agent actually influenced during the interaction.

GenAI produces a fairer and more precise view of true service quality and allows agents to understand their current performance as well as their improvement opportunities.

Typically, SQM’s GenAI agent CSAT score prediction matches the agent survey CSAT score at a 90% or greater level.
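One simple way to read a "match" figure like this is as the share of calls where the predicted rating agrees with the surveyed rating. The sketch below assumes each call is reduced to a satisfied/not-satisfied label. The function name and the sample data are made up for illustration, and SQM's own matching method may differ.

```python
from typing import Sequence


def agreement_rate(predicted: Sequence[bool], surveyed: Sequence[bool]) -> float:
    """Share of calls where the predicted label equals the surveyed label."""
    if len(predicted) != len(surveyed):
        raise ValueError("predicted and surveyed must have the same length")
    if not predicted:
        raise ValueError("at least one call is required")
    matches = sum(p == s for p, s in zip(predicted, surveyed))
    return matches / len(predicted)


# Hypothetical data: True means the customer was satisfied with the agent.
predicted = [True, True, False, True, True, True, False, True, True, True]
surveyed = [True, True, False, True, False, True, False, True, True, True]

rate = agreement_rate(predicted, surveyed)
print(f"Prediction matches survey on {rate:.0%} of calls")  # 90%
```

In this toy sample the prediction disagrees with the survey on one call out of ten, so the agreement rate is 90%.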

As Mike Desmarais, Founder & CEO of SQM, explains:

“Auto QA GenAI is more accurate than doing post-call surveys for measuring customer experience.”

5. How Your Contact Center Compares to the Industry

One of the most powerful elements of the Auto QA Benchmark Snapshot is the ability to compare your contact center’s performance against 500+ leading contact centers across North America. Internal reporting may show progress—but benchmarking shows where you truly stand.

This external comparison highlights where your center outperforms peers and where improvement opportunities exist that may not be visible through internal metrics alone. Leaders gain a clear understanding of how their QA, CSAT, and resolution performance rank against top-performing organizations.

These insights remove uncertainty from performance management. Instead of asking, “Are we doing well?”, leaders can ask, “How do we compare?”—and receive a data-backed answer that guides smarter strategic and coaching decisions.

This competitive context is especially valuable during periods of change—such as new product launches, seasonal volume spikes, or staffing shifts. Leaders can quickly determine whether performance dips are isolated to their contact center or part of a broader industry trend, allowing for faster and more confident response planning.

6. Turning Benchmark Insights Into Measurable Improvement

Benchmarking is only valuable when it leads to action. The Contact Center Auto QA Benchmark Snapshot translates industry comparisons into clear improvement opportunities by showing where performance differs from best-in-class contact centers.

These insights help leaders focus on the changes that create the greatest impact—whether related to coaching priorities, process improvements, or agent development strategies. Instead of spreading efforts across dozens of initiatives, benchmarking points directly to where improvement will deliver the fastest and most measurable gains.

For example, leaders may discover that they have significantly more “agent sources of error” than “organizational or customer sources of error,” or see how they stack up against their industry peers for CSAT and FCR.
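As a rough illustration of that kind of comparison, the sketch below tallies unresolved calls by source of error and reports each source's share. The labels follow the three sources named earlier (customer, agent, and company), while the function name and the counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def error_source_shares(labels: Iterable[str]) -> Dict[str, float]:
    """Return each source of error as a fraction of all labeled calls."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {source: count / total for source, count in counts.items()}


# Hypothetical labels for ten calls that were not resolved on first contact.
labels = ["agent"] * 6 + ["company"] * 3 + ["customer"] * 1

shares = error_source_shares(labels)
for source, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{source}: {share:.0%}")
```

A breakdown like this, set next to a peer benchmark, makes it easier to see whether coaching or process changes deserve attention first.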

7. Why SQM’s Auto QA Benchmark Is Different From Anything Else in the Industry

SQM’s Auto QA Benchmark is built on a QA–CXM hybrid model, which directly links agent performance data to real customer experience outcomes. Most QA programs only show whether procedures were followed. SQM’s model shows whether those actions actually produced a positive customer experience.

Traditional post-call surveys measure how customers feel after an interaction, but they do not always reflect what truly happened during the call. SQM’s GenAI evaluates the entire conversation itself, measuring agent communication, accuracy, empathy, and resolution behavior.

By combining QA and CX into one performance model, SQM’s Auto QA Benchmark shows:

- How agent behavior truly affects customer satisfaction

- Where performance strengths and gaps really exist

- How service quality compares to top-performing contact centers

- Why internal QA scores and customer experience results do not always align

This is what makes SQM’s Auto QA Benchmark fundamentally different: it does not just measure quality or sentiment in isolation. It connects the two, creating a clear, defensible view of real customer experience performance.

The Clarity Leaders Need to Compete—and Win

The Contact Center Auto QA Benchmark Snapshot gives contact center leaders something internal reporting alone cannot: industry-level clarity. It shows where performance truly stands, what drives customer outcomes, and where the greatest improvement opportunities exist.

By combining statistically valid measurement, predictive CSAT accuracy, and industry-wide benchmarking, SQM equips contact centers with the insight needed to move from assumption to certainty. When leaders understand how they compare, and why, they can coach smarter, operate more efficiently, and deliver better customer experiences.

For organizations exploring automation, SQM is offering a complimentary 14-day risk-free Auto QA Trial, valued at $75K. Validate results using your own QA data before making a long-term commitment.

“There’s no better way to assess Auto QA than with your own calls.”

- Mike Desmarais, Founder & CEO, SQM