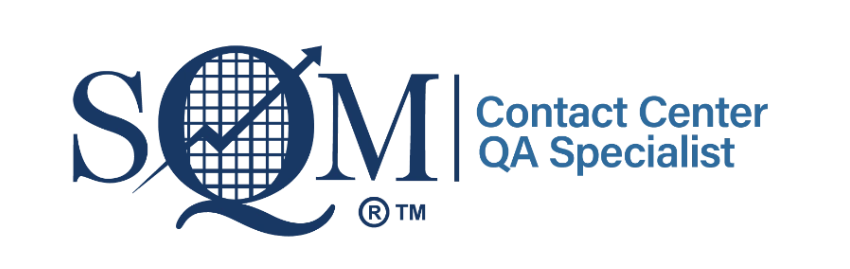

Contact Center CSAT/QA Benchmark Snapshot – At a Glance

SQM’s Contact Center Auto QA Benchmark Snapshot provides a current, data-driven assessment of your contact center’s customer satisfaction (CSAT) and quality assurance (QA) performance using SQM’s standardized customer experience (CX), compliance, and repeat call metrics.

Using Generative AI (GenAI), we evaluate a statistically valid sample of 400 recorded calls and conduct 400 corresponding post-call surveys (via email or phone) to assess performance accurately and deliver actionable insights that drive CSAT and compliance improvement.
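As a rough check on what a sample of 400 implies, the sketch below computes the margin of error for a proportion such as the share of satisfied customers. It assumes a simple random sample, a 95% confidence level (z = 1.96), and the worst-case proportion of 0.5; these are textbook assumptions, not details of SQM's methodology.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z_score: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion.

    Assumes a simple random sample; z_score=1.96 corresponds to 95% confidence.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z_score * math.sqrt(proportion * (1.0 - proportion) / sample_size)


moe = margin_of_error(400)
print(f"Margin of error for n=400: +/-{moe * 100:.1f} percentage points")
```

Under these assumptions, 400 calls or surveys give a margin of error of roughly 4.9 percentage points.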

Since 1996, SQM’s CSAT & QA Benchmark Snapshot has set the industry standard for measuring and predicting CSAT with up to 95% accuracy, benchmarking QA and CX performance against over 500 leading contact centers, and awarding organizations that achieve outstanding CSAT results.

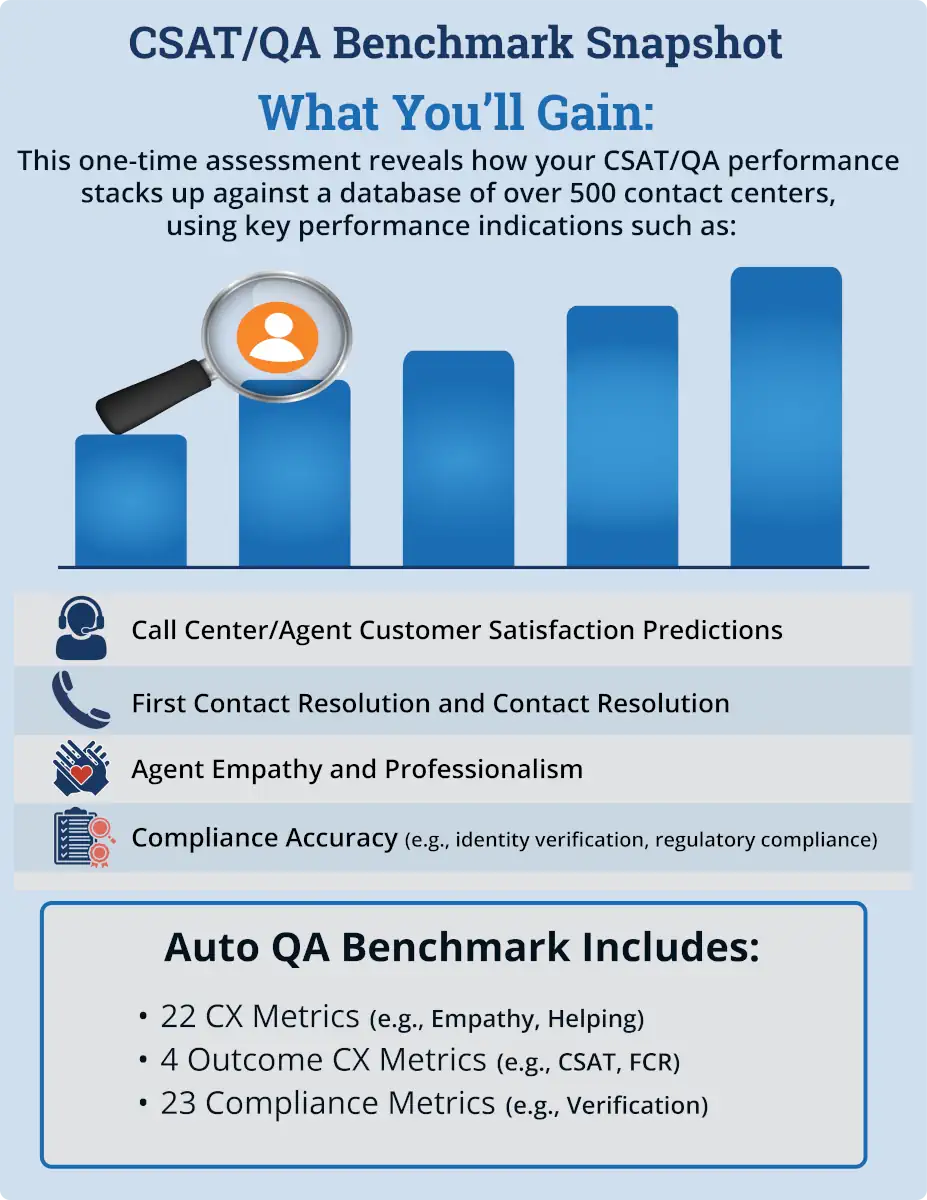

The QA Scoring Framework

The QA Scoring Framework is a four-step process. It ingests and preprocesses call data (including transcription, speaker separation, and redaction) and applies intent and behavior detection (via NLU, sentiment analysis, and behavioral tagging). It then scores performance using rule-based rubrics, LLM evaluation, CSAT prediction, and call calibration, and aggregates the results into composite QA scores that are benchmarked against peers, the industry, and world-class contact centers.

Step-by-Step Process:

1. Input Ingestion & Preprocessing

- Audio Transcription: Speech-to-text applied to call recordings

- Speaker Diarization: Differentiates between agent and customer

- Data Redaction: Sensitive data (e.g., names, credit card info, PHI) is removed

- Data Retention: Transcription data is not retained and is not used to train AI models

- Transcription Quality: GenAI transcriptions are analyzed to ensure they meet SQM’s quality standards for assessing CSAT and compliance performance
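The redaction step above can be illustrated with a minimal sketch. The patterns and placeholder labels are hypothetical and cover only card-like digit runs and email addresses; redacting names or PHI would typically need entity recognition, which is not shown here, and nothing below describes SQM's actual redaction logic.

```python
import re

# Hypothetical patterns for illustration only.
# A card-like number: 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
# A simple email address shape.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(transcript: str) -> str:
    """Replace card-like numbers and email addresses with placeholder tokens."""
    transcript = CARD_PATTERN.sub("[REDACTED_CARD]", transcript)
    transcript = EMAIL_PATTERN.sub("[REDACTED_EMAIL]", transcript)
    return transcript


print(redact("Card 4111 1111 1111 1111 on file, email jane.doe@example.com please"))
```

Running redaction before any scoring step means downstream components never see the raw values.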

2. Intent & Behavior Detection

- Natural Language Understanding (NLU): Detects intents (such as empathy or resolution) and behaviors relevant to QA scoring

- Sentiment Analysis: Captures the emotional tone of both parties

- Behavioral Tagging: Detects QA-relevant behaviors like empathy, greeting, knowledge, and listening
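To make behavioral tagging concrete, here is a deliberately simple keyword-based sketch. The phrase lists and the function name are hypothetical, and plain phrase matching is only a stand-in for the NLU models described above, which would handle paraphrase and context.

```python
# Hypothetical phrase lists; a production system would use trained NLU models.
BEHAVIOR_PHRASES = {
    "greeting": ("thank you for calling", "good morning", "good afternoon"),
    "empathy": ("i'm sorry", "i understand", "i apologize"),
}


def tag_behaviors(agent_utterance: str) -> set:
    """Return the set of behavior tags whose phrases appear in the utterance."""
    lowered = agent_utterance.lower()
    return {
        behavior
        for behavior, phrases in BEHAVIOR_PHRASES.items()
        if any(phrase in lowered for phrase in phrases)
    }


print(sorted(tag_behaviors("Thank you for calling, I understand the frustration.")))
```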

3. Scoring Methodologies

- Rule-Based Rubric Logic: A standardized approach to assess call quality by measuring key behaviors like communication, compliance, and issue resolution

- Large Language Models: LLMs (i.e., OpenAI models) are used to evaluate CX and compliance adherence (YOUR QA DATA IS NOT USED FOR AI MODELING)

- CSAT Prediction: Proprietary post-call CSAT prediction models use 10 key components to predict agent CSAT scores, achieving up to a 95% match with survey-based agent CSAT scores

- Call Calibration: Post-call surveys (by phone or email) of your contact center's customers are used to calibrate GenAI CX results, rather than relying on QA evaluators
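One way to read the "match" between predicted and survey-based CSAT is as a simple agreement rate over paired calls. The sketch below takes that reading as an assumption; the document does not define how the match is calculated, and the sample values are invented for illustration.

```python
def match_rate(predicted, surveyed):
    """Fraction of calls where the predicted rating equals the survey rating.

    Assumes both sequences are aligned call by call.
    """
    if len(predicted) != len(surveyed):
        raise ValueError("predicted and surveyed must have the same length")
    if not predicted:
        raise ValueError("at least one call is required")
    matches = sum(p == s for p, s in zip(predicted, surveyed))
    return matches / len(predicted)


# Illustrative data only: True means the customer was rated as satisfied.
predicted_satisfied = [True, True, False, True]
surveyed_satisfied = [True, False, False, True]
print(f"Match rate: {match_rate(predicted_satisfied, surveyed_satisfied):.0%}")
```

Calls where the prediction and the survey disagree are natural candidates for calibration review.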

4. Aggregation & Benchmarking

- Metric Consolidation: Grouped by categories (CX, compliance, repeat calls)

- Composite QA Score: Based on the weights and scores of the 22 CX QA metrics

- Benchmarking: Performance is compared against peers, the industry average, and world-class contact centers
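A composite score built from weighted metrics can be sketched as a weighted average. The metric names below are borrowed from the CX examples in this document, but the weights, the 0 to 100 scale, and the function name are assumptions for illustration; the actual weighting of the 22 CX QA metrics is not given here.

```python
from typing import Mapping


def composite_score(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted average of metric scores, normalized by the total weight."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# Hypothetical weights and scores on an assumed 0-100 scale.
weights = {"understanding": 0.2, "empathy": 0.2, "helping": 0.3, "resolving": 0.3}
scores = {"understanding": 80, "empathy": 90, "helping": 70, "resolving": 60}
print(f"Composite QA score: {composite_score(scores, weights):.1f}")
```

Normalizing by the total weight keeps the composite on the same scale as the inputs even if the weights do not sum to exactly 1.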

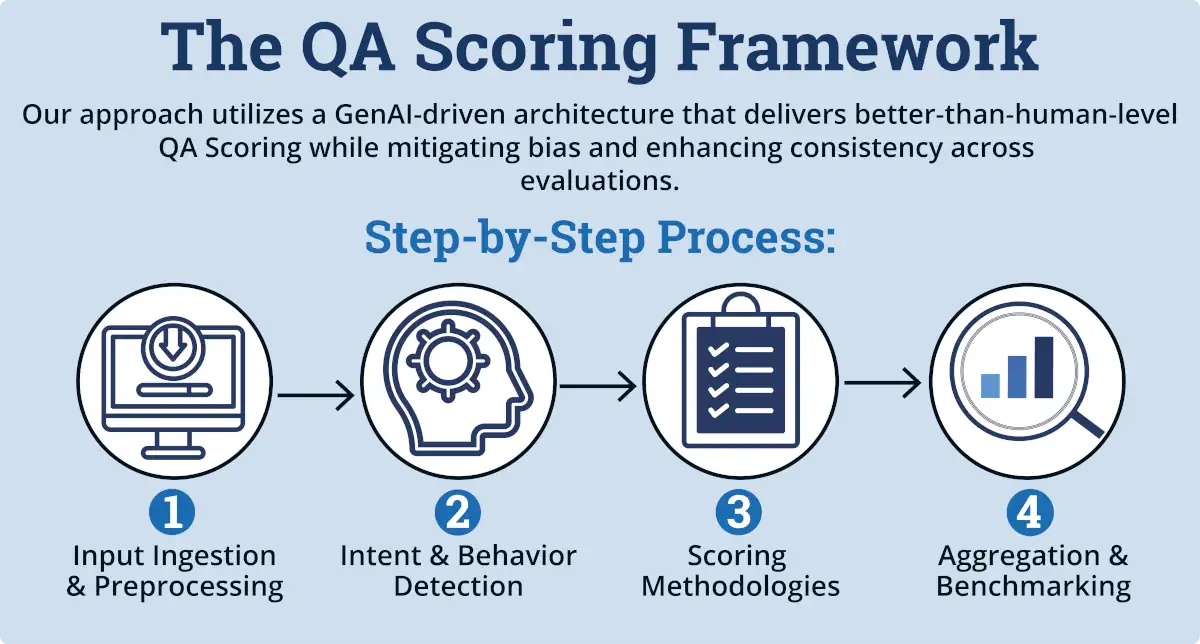

Contact Center CSAT/QA Benchmark Snapshot Outcome

The CSAT/QA Benchmark Snapshot outcome provides a comprehensive benchmark comparison against over 500 North American call centers—covering CX, compliance, repeat call metrics, error sources, transcription quality, GenAI CSAT predictions, and recognition through SQM’s Excellence Awards.

- Benchmark comparison of your CSAT/QA results to over 500 leading North American call centers

- Benchmark comparison to peer groups, the call center industry, and world-class call centers

- Transcription quality score and benchmark comparison to other participants

- How closely GenAI agent CSAT score predictions match survey-based agent CSAT ratings

- 4 Outcome CX metrics (i.e., call center CSAT, agent CSAT, FCR, and call resolution) results

- 22 CX metrics (e.g., Understanding, Empathy, Helping, and Resolving) results

- 23 Call compliance metrics (e.g., caller verification, script adherence, resolution disclosure) results

- 39 Repeat call metrics (e.g., billing/claim issue, agent approach, agent knowledge, customer mistake) results

- 3 Sources of error (i.e., customer, agent, and company) results

- Recognition opportunity: contact centers that have demonstrated CSAT/QA excellence are eligible for an SQM award

Key Benefits

Our CSAT/QA Benchmark Snapshot offers rapid, disruption-free deployment, calibration for accuracy, independent validation, actionable insights, and industry-wide benchmarking, plus the opportunity to earn recognition through awards and certification for world-class CX delivery.

- Rapid Deployment, Minimal Disruption: No system integration is required; we start within days and deliver results within two weeks.

- Calibration for Accuracy: SQM leverages post-call surveys to validate and fine-tune GenAI QA results.

- Third-Party Validation: Gain objective insights to complement or challenge internal and external QA results.

- Actionable Recommendations: Use findings to guide coaching, tech investments, or process improvements.

- Comprehensive Benchmarking: Measure performance across industry verticals (e.g., Banking, Telecom, Healthcare, Insurance) and world-class contact centers in North America.

- Awards & Certifications: Companies that have demonstrated high QA and CSAT results will receive awards and/or be certified as world-class companies for CX delivery.